GLM-4.5: Free Chinese AI Giant with Agentic Abilities

BEIJING – In a significant move shaking up the AI landscape, Chinese startup Zhipu AI, considered one of the industry’s rising “tigers,” has announced the launch of a new family of open-source models, led by GLM-4.5 and its lighter counterpart, GLM-4.5-Air.

The announcement arrives as the pace of innovation among major tech companies accelerates, with each contender striving to deliver more powerful and efficient models.

According to an official company statement, the new model is specifically designed for intelligent agent applications: systems capable of independently reasoning through and executing complex tasks.

A Unique Architecture for Stable Performance

Deviating from the prevailing trend of massively scaling up model sizes, Zhipu has opted for a different path. GLM-4.5 is built on a Mixture-of-Experts (MoE) architecture but with a distinct philosophy.

Instead of packing the model with a vast number of experts, the company reduced the width and increased the depth.

In other words, the model stacks more layers, each of them narrower, rather than spreading capacity across fewer, wider ones.

According to its developers, this design has yielded superior logical reasoning and more stable behavior when handling long-context tasks or multi-turn tool calls.
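To make the Mixture-of-Experts idea concrete, here is a minimal sketch of top-k routing, the general mechanism by which an MoE layer runs only a few experts per input. It is an illustration under stated assumptions, not Zhipu's implementation: the toy experts, the router scores, and the function names `route_top_k` and `moe_layer` are all hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Weights are renormalized so the selected experts' weights sum to 1.
    """
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_layer(x, experts, router_scores, k):
    """Weighted sum of the outputs of the selected experts only."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_scores, k))

# Hypothetical toy experts: each one simply scales its input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
scores = [0.1, 2.0, -1.0, 1.5]  # assumed router scores for one token

selected = route_top_k(scores, k=2)
output = moe_layer(1.0, experts, scores, k=2)
print(selected, output)
```

Only two of the four toy experts run for this input; the other two contribute no computation, which is the source of an MoE model's efficiency.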

The model operates in two modes: a “thinking” mode for tackling complex, tool-involved problems, and a direct mode for quick, straightforward answers.

In a key architectural detail, GLM-4.5 has 355 billion total parameters, but because of the MoE design only 32 billion of them are active for any given token.

Its smaller sibling, GLM-4.5-Air, has 106 billion total parameters with just 12 billion active, offering a balance of power and efficiency.
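The ratio of active to total parameters can be worked out directly from the figures above. The short sketch below does that arithmetic; the dictionary and function names are illustrative only.

```python
# Parameter counts in billions, as reported in the article: (total, active).
MODELS = {
    "GLM-4.5": (355, 32),
    "GLM-4.5-Air": (106, 12),
}

def active_fraction(total_b, active_b):
    """Share of parameters that are active, as a value between 0 and 1."""
    return active_b / total_b

for name, (total_b, active_b) in MODELS.items():
    print(f"{name}: {active_fraction(total_b, active_b):.1%} of parameters active")
# GLM-4.5: 9.0% of parameters active
# GLM-4.5-Air: 11.3% of parameters active
```

By this measure, roughly one parameter in eleven is active in the main model and about one in nine in the Air variant.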

GLM-4.5’s Performance in a Competitive Field

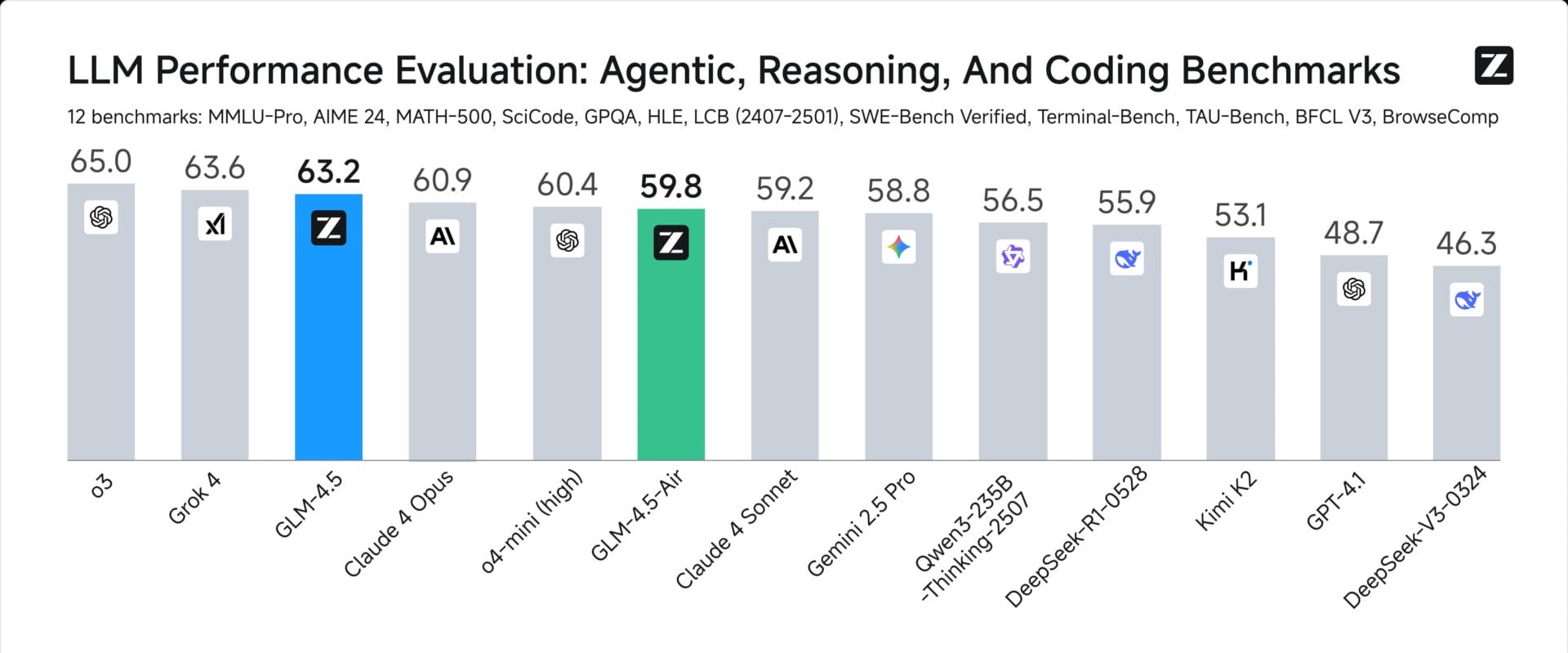

On the performance front, GLM-4.5 establishes itself as a top-tier contender.

Across 12 benchmarks covering coding, reasoning, and agentic capabilities, the model ranked third globally, just behind models from OpenAI and xAI, the maker of Grok.

Its standout feature is arguably tool-use efficiency, where it achieved a 90.6% tool-calling success rate.

A figure like this places it ahead of well-known competitors such as Claude 4 Sonnet, Kimi-K2, and Qwen3, making it a reliable choice for developers integrating models with external applications.

When it comes to coding, the picture is more nuanced. On the SWE-bench Verified benchmark, the main model scored 64.2.

For Terminal-Bench, it reached 37.5. While this performance surpasses some established models, it still trails more specialized ones like Claude 4 Opus in certain tests.

Executing AI Agent Tasks

The secret to GLM-4.5’s strength lies in its “Agent-native” architecture.

According to the company, core capabilities like complex planning, task decomposition, and tool use are built directly into the model’s foundation, rather than being bolted on as afterthoughts.

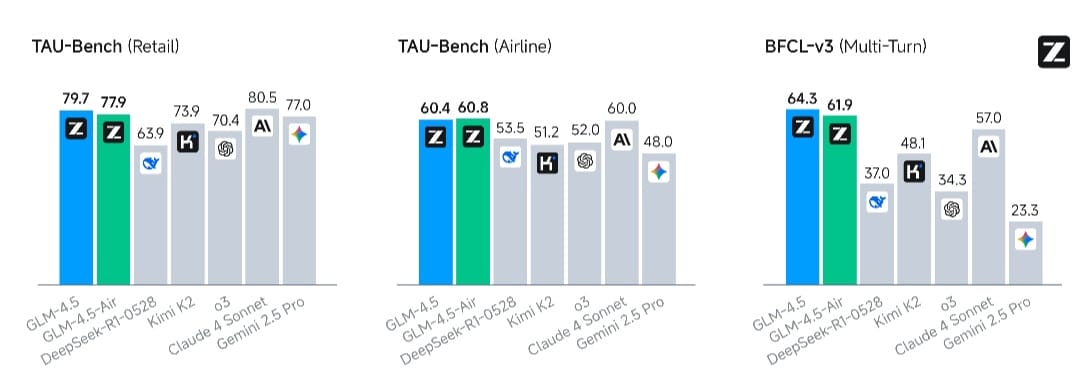

This structure translates into tangible performance. In complex web-browsing tests (BrowseComp), the model achieved a 26.4% success rate, ahead of rival Claude 4 Opus, which scored 18.8%.

Regarding tool handling and function calling, results on benchmarks such as τ-bench and BFCL-v3 show GLM-4.5 matching powerful models like Claude 4 Sonnet, which supports its case for executing complex, real-world tasks.
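For readers unfamiliar with function calling, the sketch below shows the general pattern: the model emits a structured request naming a tool and its arguments, and the host application runs the matching function. Everything here is an assumption made for illustration, including the JSON shape, the two toy tools, and the `dispatch` and `success_rate` helpers. It is not GLM-4.5's API, nor the scoring method of any benchmark mentioned above.

```python
import json

def get_weather(city):
    """Hypothetical stand-in tool that returns a canned forecast."""
    return {"city": city, "forecast": "sunny"}

def add(a, b):
    """Hypothetical stand-in tool that adds two numbers."""
    return a + b

TOOLS = {"get_weather": get_weather, "add": add}

def dispatch(tool_call_json):
    """Parse a model-emitted tool call and run the matching function."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        return {"ok": False, "error": f"unknown tool: {name}"}
    try:
        result = TOOLS[name](**call.get("arguments", {}))
    except TypeError as exc:  # wrong or missing arguments
        return {"ok": False, "error": str(exc)}
    return {"ok": True, "result": result}

def success_rate(calls):
    """Fraction of tool calls that executed without error."""
    results = [dispatch(c) for c in calls]
    return sum(r["ok"] for r in results) / len(results)

calls = [
    '{"name": "add", "arguments": {"a": 2, "b": 3}}',
    '{"name": "get_weather", "arguments": {"city": "Beijing"}}',
    '{"name": "send_email", "arguments": {}}',  # tool that does not exist
]
print(success_rate(calls))
```

A call fails in this sketch when the model names a tool that does not exist or passes arguments the tool cannot accept, two common failure modes a tool-calling success rate is meant to capture.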

Innovation in Training Methods

Behind this strong performance is a reinforcement learning training framework the company has dubbed “slime.”

It’s a hybrid training system that combines synchronous and asynchronous methods and decouples rollouts from training to prevent bottlenecks. This setup has allowed the model to be trained efficiently on realistic and complex tasks.
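The idea of decoupling rollouts from training can be illustrated with a simple producer-consumer pattern: rollout workers generate trajectories and push them into a shared buffer, while a trainer consumes batches at its own pace. This is a minimal sketch using Python threads and a queue, not the slime codebase; the worker, trainer, and trajectory format are hypothetical.

```python
import queue
import threading

def rollout_worker(worker_id, n_episodes, buffer):
    """Generate toy trajectories and push them into the shared buffer."""
    for step in range(n_episodes):
        trajectory = {"worker": worker_id, "step": step, "reward": float(step)}
        buffer.put(trajectory)  # blocks only when the buffer is full

def trainer(buffer, batch_size, n_batches, log):
    """Consume trajectories in batches, independently of the producers."""
    for _ in range(n_batches):
        batch = [buffer.get() for _ in range(batch_size)]
        # Stand-in for a training step: record the batch's mean reward.
        log.append(sum(t["reward"] for t in batch) / batch_size)

def run(n_workers=2, n_episodes=4, batch_size=4):
    buffer = queue.Queue(maxsize=8)
    log = []
    n_batches = n_workers * n_episodes // batch_size
    threads = [
        threading.Thread(target=rollout_worker, args=(i, n_episodes, buffer))
        for i in range(n_workers)
    ]
    threads.append(
        threading.Thread(target=trainer, args=(buffer, batch_size, n_batches, log))
    )
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log

log = run()
print(log)
```

Because the two sides communicate only through the buffer, a slow rollout does not stall the trainer as long as other workers keep the buffer filled, which is the bottleneck the article describes.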

It is worth noting that Zhipu AI has garnered significant attention, particularly after OpenAI acknowledged last June the Chinese company’s notable progress in securing government contracts across several regions.

Reports indicate that as of July, China had released 1,509 large language models, ranking first globally in the heated race toward the future of AI.

The company invites everyone to try the new model for free on its interactive platform.