Xiaomi Makes a Strong Entry into the AI Race with the MiMo-7B-RL Model

Xiaomi has unveiled its open-source model, MiMo-7B-RL, a large language model specialized in mathematical reasoning tasks and code generation.

At roughly 7 billion parameters, the new model is comparatively small.

Despite its small size, the company says it performs on par with much larger models such as OpenAI’s o1-mini and Alibaba’s QwQ-32B-Preview.

Training Quality: The Key to MiMo’s Performance

Xiaomi believes the key to success in AI models lies not just in size, but in the quality of pre-training.

For this reason, MiMo’s pre-training phase was carefully tuned to increase the density of “reasoning patterns” in the training data.

This work included building tools to extract technical and programming text, and applying multi-dimensional filters to concentrate the data on logical problems.

The company also devised a three-stage data-mixing strategy covering around 25 trillion training tokens, which it says strengthens the model’s ability to understand complex relationships.

Additionally, Xiaomi applied a technique called Multiple-Token Prediction (MTP), in which the model learns to predict several upcoming tokens rather than only the next one, to speed up response generation and improve contextual understanding.
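As a rough illustration of the idea, the sketch below builds training targets in which each position is paired with the next `k` tokens instead of just one. The function name `mtp_targets` and the toy token IDs are hypothetical, and this is only a simplified picture of multi-token prediction in general, not Xiaomi’s implementation.

```python
from typing import List, Tuple


def mtp_targets(tokens: List[int], k: int) -> List[Tuple[int, List[int]]]:
    """Pair each token with the k tokens that follow it.

    With k=1 this reduces to ordinary next-token prediction.
    Hypothetical helper for illustration only.
    """
    pairs = []
    # Stop early enough that every position still has k future tokens.
    for i in range(len(tokens) - k):
        pairs.append((tokens[i], tokens[i + 1 : i + 1 + k]))
    return pairs


# Toy sequence of token IDs (made up for the example).
print(mtp_targets([10, 11, 12, 13, 14], k=2))
```

At inference time, the extra predicted tokens can serve as draft tokens that the main model verifies, which is one way such a scheme can speed up generation.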

Reinforcement Learning Phase for Increased Accuracy and Efficiency

After building the base MiMo-7B model, Xiaomi moved on to the reinforcement learning (RL) training phase, using a curated set of 130,000 math and programming problems.

Each problem can be checked automatically, either with test cases or by numerical validation, so that rewards are objective.
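A minimal sketch of what numerical validation of a math answer might look like is shown below. The function `verify_numeric` and its tolerance values are assumptions made for illustration; the article does not describe Xiaomi’s actual checker.

```python
import math


def verify_numeric(model_answer: str, reference: float, rel_tol: float = 1e-6) -> bool:
    """Return True if the model's answer parses as a number close to the reference.

    Hypothetical checker: unparseable answers simply count as wrong.
    """
    try:
        value = float(model_answer.strip())
    except ValueError:
        return False
    return math.isclose(value, reference, rel_tol=rel_tol, abs_tol=1e-9)


print(verify_numeric(" 42 ", 42.0))
print(verify_numeric("forty-two", 42.0))
```

Because the check is purely rule-based, the same answer always receives the same verdict.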

To avoid the sparse-reward problem in difficult code generation tasks, Xiaomi developed a reward scheme that grants the model partial credit for passing subsets of a problem’s test cases. This gives the model a more gradual learning signal and makes training more stable.
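The partial-credit idea can be sketched as follows. This simplified version weights every test case equally, which is an assumption; the function name `partial_reward` and the toy tests are hypothetical and do not reflect Xiaomi’s exact scoring rules.

```python
from typing import Callable, List, Tuple


def partial_reward(solution: Callable[[int], int], tests: List[Tuple[int, int]]) -> float:
    """Return the fraction of (input, expected) test cases the solution passes.

    An all-or-nothing reward would return 1.0 only if every test passed;
    here each passing test contributes equally (an illustrative assumption).
    """
    if not tests:
        return 0.0
    passed = 0
    for arg, expected in tests:
        try:
            if solution(arg) == expected:
                passed += 1
        except Exception:
            # A crashing solution simply fails that test case.
            pass
    return passed / len(tests)


# Toy problem: "square the input". The buggy candidate doubles it instead.
tests = [(2, 4), (3, 9), (-1, 1), (0, 0)]
print(partial_reward(lambda x: x * 2, tests))
print(partial_reward(lambda x: x * x, tests))
```

Under an all-or-nothing reward, the buggy candidate would score zero despite being partly right; partial credit lets the learning signal distinguish it from a completely wrong answer.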

The company also built training infrastructure called the “Seamless Rollout Engine.” This system reduces GPU idle time, speeding up training by 2.29 times and validation by 1.96 times.

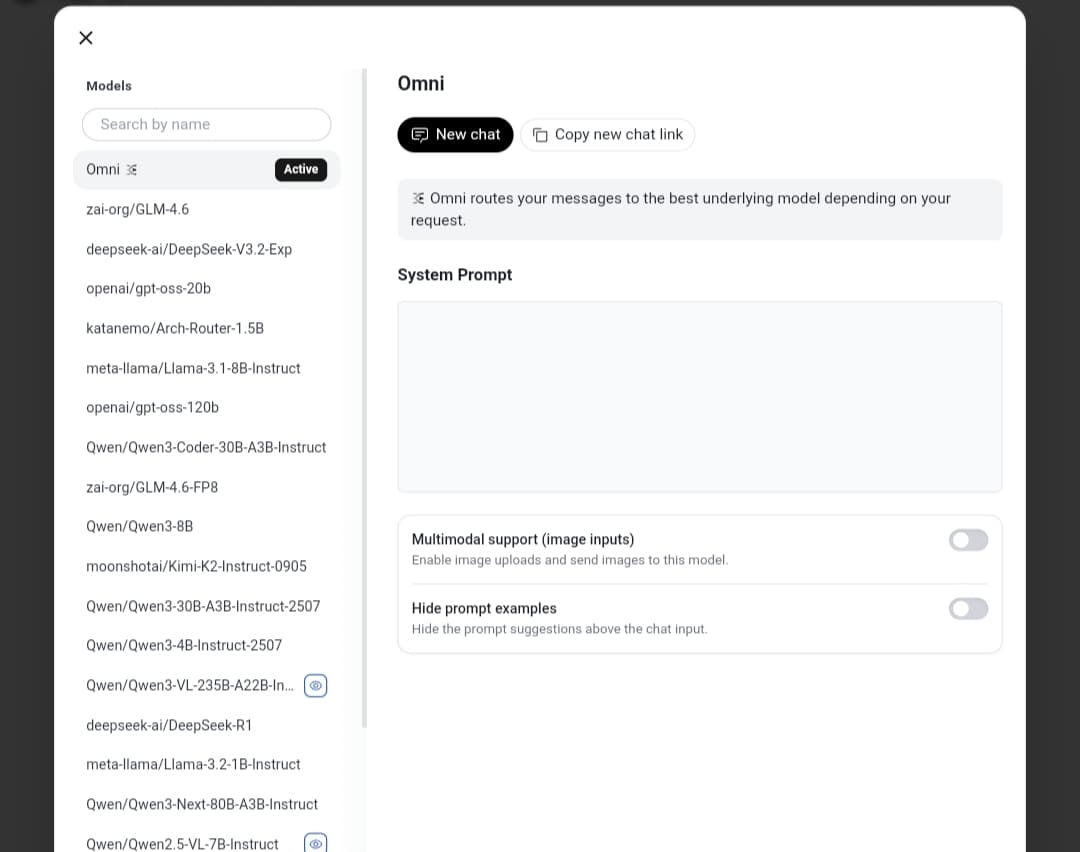

Multiple Versions

Xiaomi has released four versions within the MiMo-7B series:

- MiMo-7B-Base: The base model with strong initial reasoning capabilities

- MiMo-7B-RL-Zero: A version trained with RL directly from the base model

- MiMo-7B-SFT: A version trained with supervised fine-tuning (SFT)

- MiMo-7B-RL: The final high-performance release

According to Xiaomi’s published benchmarks, MiMo-7B-RL achieved the following results:

| Test | Success Rate |
|---|---|
| Mathematics | |
| MATH-500 | 95.8% |
| AIME 2024 | 68.2% |
| AIME 2025 | 55.4% |
| Programming | |
| LiveCodeBench v5 | 57.8% |
| LiveCodeBench v6 | 49.3% |

On these figures, the 7-billion-parameter model matches or beats several much larger competitors, placing it among the strongest models for specialized math and code tasks.

Available to Everyone via Hugging Face

Xiaomi has made the MiMo models available on the Hugging Face platform, along with detailed usage instructions.

The model can be run through the Transformers library or Xiaomi’s custom fork of vLLM, with support for Multiple-Token Prediction to accelerate inference.
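For readers who want to try it, a minimal sketch of loading the model with Transformers might look like the following. The repository name `XiaomiMiMo/MiMo-7B-RL` is assumed from the organization page linked in this article, and `trust_remote_code=True` is assumed to be needed because the model ships custom code; check the model card for the recommended settings.

```python
# Assumed repository name under the XiaomiMiMo organization on Hugging Face.
MODEL_ID = "XiaomiMiMo/MiMo-7B-RL"


def generate(prompt: str, max_new_tokens: int = 100) -> str:
    """Generate a completion for `prompt` with the Transformers library."""
    # Imported lazily so that defining this function does not require the library.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    # trust_remote_code=True lets Transformers run model code shipped in the
    # repository (an assumption here; review that code before enabling it).
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID, trust_remote_code=True)

    inputs = tokenizer([prompt], return_tensors="pt")
    output = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output[0], skip_special_tokens=True)
```

Calling `generate("Today is")` downloads the weights on first use, so expect a multi-gigabyte download and significant memory use for a 7-billion-parameter model.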

Access the Model

https://huggingface.co/XiaomiMiMo