Google Showcases Gemini Capabilities with Interactive Web App

Google recently unveiled a series of significant updates to its AI assistant, Gemini, offering users a glimpse into the future of AI interaction.

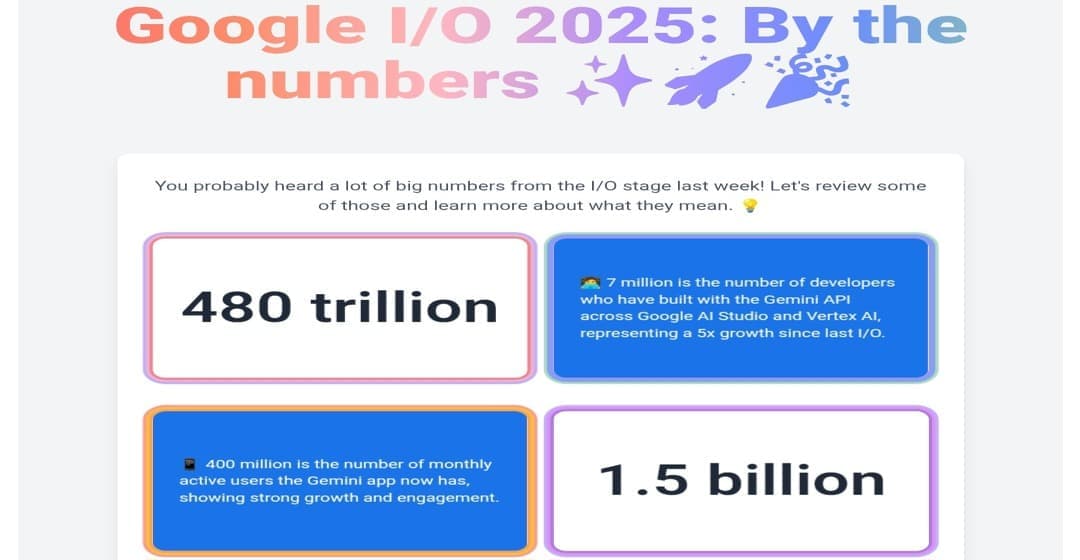

Notably, the company introduced an interactive web application, built almost entirely by Gemini, that presents the key announcements from the I/O 2025 developer conference in an engaging format.

This initiative not only highlights the conference’s main takeaways but also serves as a practical demonstration of Gemini’s capabilities as a programming and application development tool.

According to Google, Gemini was used to analyze keynote transcripts from the conference, extract the important figures and information, and explain them.

After that, developers needed only “a few tweaks to ensure accuracy,” the company said.

Users can now explore the interactive application, view the numbers and statistics from the conference, and even examine the code Gemini produced to build it in Canvas, Google’s AI-enhanced workspace.

Beyond recapping previously announced information, the application included one detail that was not mentioned during the main conference events.

One section of the app revealed that availability of Veo 3, Google’s latest video generation model, had been expanded to 71 additional countries as of May 24.

Substantial Updates to Enhance the Gemini Experience

Apart from the conference web application, Gemini itself received significant upgrades that make it more capable across a range of user needs.

The Gemini Live feature, which enables real-time voice and visual interaction with the assistant via phone camera and screen sharing, is now available for free to all Android and iOS users.

Google reported that Gemini Live conversations are five times longer than text-based interactions, which suggests users prefer this kind of engagement for tasks such as troubleshooting a broken device or getting personalized shopping advice.

Google also plans to integrate Gemini Live more deeply with its other applications, such as Maps, Calendar, Tasks, and Keep, to make it easier to manage daily plans and access information.

For creative content generation, Gemini now includes the Imagen 4 image generation model, which produces high-quality images and renders text more accurately.

For video production, the Veo 3 model stands out as the first of its kind to natively generate sound effects, background noise, and character dialogue from simple text prompts.

This capability is currently available to Google AI Ultra subscribers in the United States and can also be tested through Google AI Studio.

Advanced Research Tools and Broad Programming Capabilities

Deep Research and Canvas have also received their most significant updates to date.

Deep Research now lets users combine their own private data from PDFs and images with public data to generate comprehensive, customized reports. Google plans to add information from Google Drive and Gmail in the future.

Canvas, Gemini’s creative workspace, has become more powerful with the Gemini 2.5 models. It can now create interactive charts, quizzes, and audio summaries in 45 languages, and it can quickly turn complex ideas into efficient, accurate code.

For Chrome users, Google has begun rolling out Gemini directly in the browser for Pro and Ultra subscribers in the United States who use Chrome in English on Windows or macOS. The initial version lets users ask Gemini to clarify complex information on any webpage or to summarize its content.
