DeepSeek-R1-0528: DeepSeek Updates R1 & Unveils a Mini Version

DeepSeek, a prominent name in artificial intelligence, has announced an update to its well-known R1 reasoning model, its latest move in the global AI competition.

This update, which the company described as a “minor upgrade,” comes packed with tangible improvements, according to DeepSeek’s statement on the Hugging Face platform for developers.

The update aims to strengthen the model’s deep thinking and complex task handling, and it arrives alongside a smaller, more efficient “distilled” version.


DeepSeek-R1-0528

The updated version of R1, codenamed R1-0528, brings a significant improvement in reasoning depth and inference capabilities.

DeepSeek reported that this new release reduced the occurrence of erroneous or misleading outputs, commonly known as “hallucinations,” by 45-50% in tasks such as rephrasing and summarization.

Furthermore, the model showcased enhanced capabilities in creative writing across various literary genres and frontend code generation, alongside improved role-playing performance.

Despite these advancements, a report indicated that the updated model, with its 685 billion parameters, might necessitate specialized hardware for efficient operation.

The model is available under the permissive MIT license, which allows for commercial use.

DeepSeek-R1-0528-Qwen3-8B

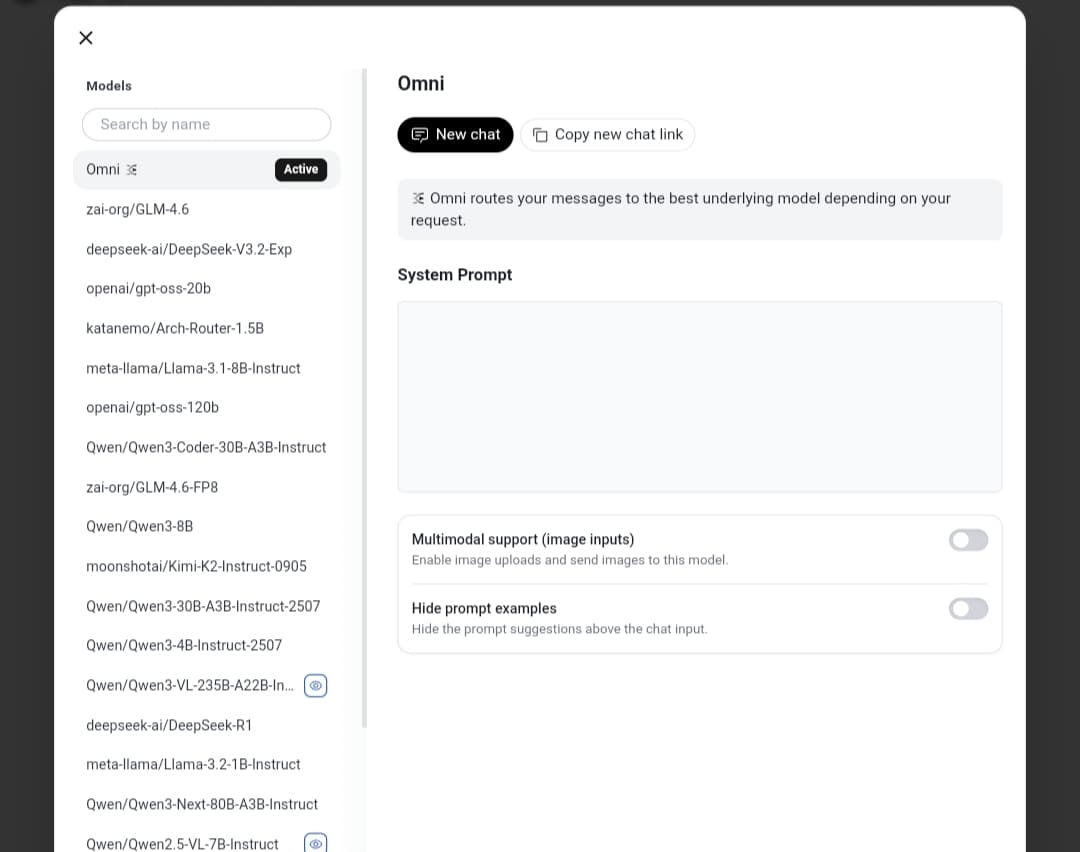

Parallel to the main update, DeepSeek unveiled a smaller, optimized version named DeepSeek-R1-0528-Qwen3-8B.

This smaller model was built upon the Qwen3-8B model, which Alibaba launched in May.

DeepSeek stated that this distilled model surpassed Google’s Gemini 2.5 Flash model in the challenging AIME 2025 math questions dataset.

Its performance also neared that of Microsoft’s recently released Phi 4 model in another math skills test.

These distilled models are designed to be less demanding in terms of computational resources.
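To put the gap in resource demands in rough numbers, the sketch below estimates the memory needed just to hold each model's weights at a few common precisions. It is a back-of-the-envelope calculation, not a measurement: the 685 billion figure comes from the report cited above, the 8 billion figure is assumed from the "8B" in the distilled model's name, and the function and variable names are illustrative. It ignores the KV cache, activations, and runtime overhead, so real requirements would be higher.

```python
# Rough weight-only memory estimate; excludes KV cache, activations, and overhead.
# Bytes per parameter at common precisions (standard sizes, not DeepSeek-specific).
BYTES_PER_PARAM = {"fp16/bf16": 2.0, "fp8/int8": 1.0, "4-bit": 0.5}

# Parameter counts: 685e9 is the figure quoted in the article;
# 8e9 is an assumption based on the "8B" in the distilled model's name.
MODELS = {
    "DeepSeek-R1-0528": 685e9,
    "DeepSeek-R1-0528-Qwen3-8B": 8e9,
}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def summarize() -> list[tuple[str, str, float]]:
    """Return (model, precision, gigabytes) rows for every combination."""
    return [
        (name, precision, weight_memory_gb(params, nbytes))
        for name, params in MODELS.items()
        for precision, nbytes in BYTES_PER_PARAM.items()
    ]


if __name__ == "__main__":
    for name, precision, gb in summarize():
        print(f"{name:28s} {precision:10s} {gb:8.1f} GB")
```

Under these assumptions, the full model's weights alone run from several hundred gigabytes to over a terabyte depending on precision, while the distilled model's weights fit in the memory of a single consumer-class GPU.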

The company said the distilled model is aimed at both academic research on reasoning models and industrial development focused on small-scale models. This smaller model is also available under the MIT license.

DeepSeek-R1’s Success in the AI Market

The debut of the original R1 model in January 2025 came as a surprise that shook global technology markets.

The model demonstrated its ability to compete with, and even surpass, models developed by Western giants like OpenAI and Meta AI across several benchmark metrics, purportedly at a much lower cost.

According to reports, DeepSeek’s success overturned prevailing notions that U.S. export controls would impede China’s progress in AI.

This success also prompted other major Chinese companies, such as Alibaba and Tencent, to launch their own models, claiming superior performance.

On the other hand, this competition has driven American companies to adjust their strategies by offering lighter models and more affordable access tiers.

Call for Testing

Meanwhile, the R1 update drew the attention of observers, particularly because DeepSeek initially disclosed few details about it, whereas the original launch was accompanied by a detailed research paper.

Reports indicated that a company representative described the update as a “simple experimental upgrade” and invited users to begin testing it. Separately, some in the United States view DeepSeek’s technology as a potential national security risk.

Looking ahead, the company is still widely expected to unveil R2, the successor to R1, which earlier reports said was originally planned for release in May.