Alibaba Qwen2.5-Omni: A Multimodal AI Model for Your Phone

Alibaba Group has released Qwen2.5-Omni-7B, a significant new entry in the artificial intelligence race.

This isn’t just another large language model; it’s a versatile, multimodal system designed to understand and process a mix of text, images, audio, and video.

Perhaps most notably, it’s built to run efficiently on consumer devices like smartphones and laptops, and Alibaba has made it freely available as open-source software.

What Exactly is Qwen2.5-Omni?

Qwen2.5-Omni-7B is the latest flagship model in Alibaba’s “Qwen” AI series.

As a multimodal model, it can accept and interpret various data types simultaneously.

With a relatively compact size of 7 billion parameters, it’s optimized for deployment on edge devices, meaning it can operate locally without constant reliance on powerful cloud servers, while still delivering robust performance.
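To see why parameter count matters for on-device use, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need. It takes the 7 billion figure at face value; the precisions listed are common storage formats chosen for illustration, not ones this article confirms for Qwen2.5-Omni, and activations and caches are ignored.

```python
# Back-of-the-envelope weight memory for a 7-billion-parameter model.
# Assumption: only weights are counted; activations, caches, and any
# runtime buffers are ignored, so real usage will be higher.
PARAMS = 7_000_000_000

# Illustrative storage formats (assumed, not taken from the article).
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16/bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_gigabytes(params: int, bytes_per_param: float) -> float:
    """Return weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


for name, size in BYTES_PER_PARAM.items():
    print(f"{name:>10}: {weight_gigabytes(PARAMS, size):.1f} GB")
```

Under these assumptions, halving the bytes per parameter halves the weight footprint, which is why lower-precision formats are usually what make a model of this size plausible on a laptop or phone.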

Its open-source nature invites developers worldwide to utilize and build upon it.

Key Capabilities of Qwen2.5-Omni

The new model boasts several features that set it apart:

Comprehensive Multimodal Understanding

It seamlessly processes diverse inputs – text, images, audio, and video – allowing for more nuanced interactions.

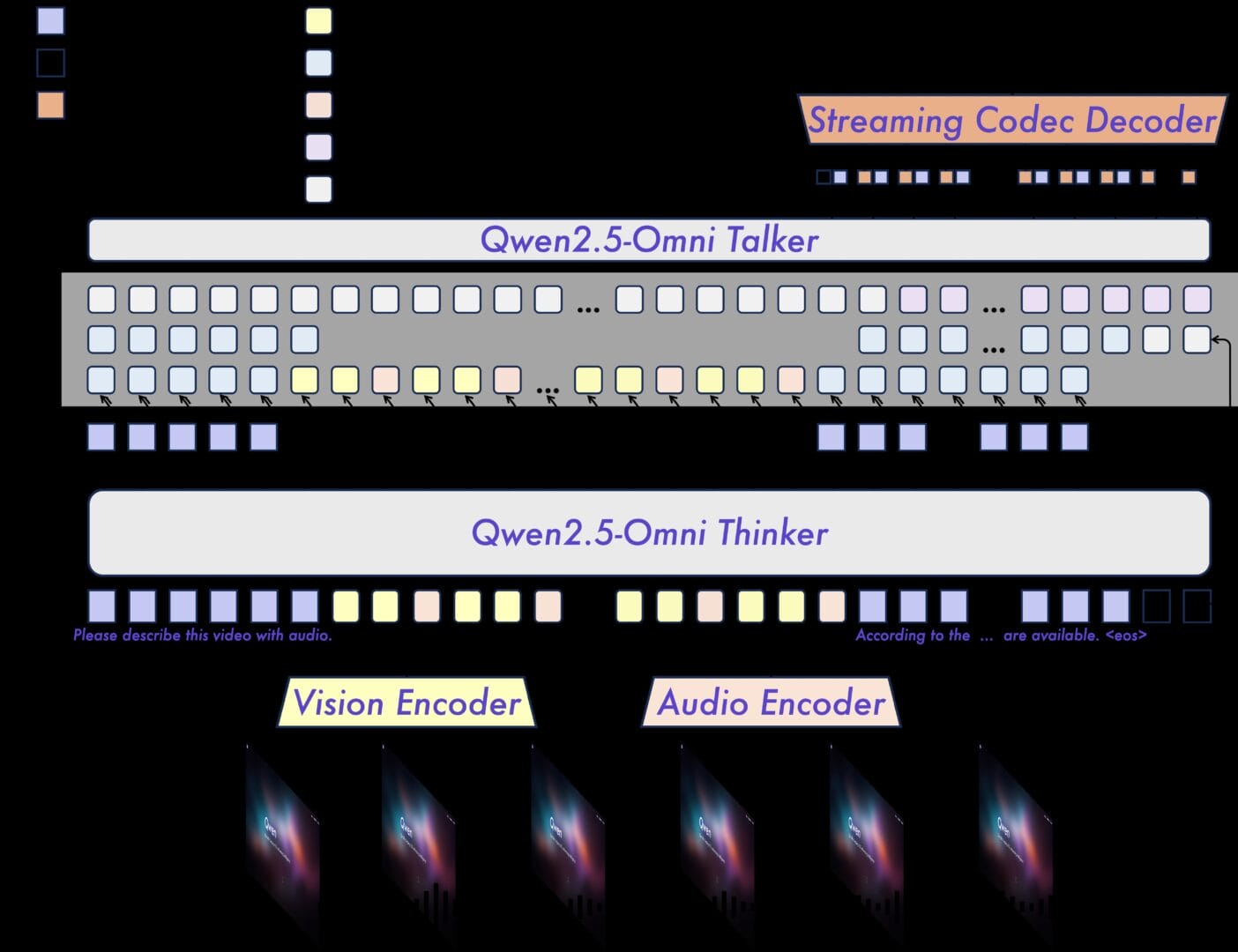

Innovative “Thinker-Talker” Architecture

Underpinning its abilities is a novel architecture.

The “Thinker” component acts like the brain, processing and understanding the varied inputs.

The “Talker” component functions like the mouth: it takes the processed information from the Thinker and generates real-time streaming responses as text or natural-sounding speech.
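The division of labor described above can be pictured as a two-stage streaming pipeline. The following is only a toy sketch: the names `ThinkerStep`, `thinker`, and `talker` are hypothetical, the "hidden" values are placeholders, and the fake audio bytes stand in for real speech synthesis. It is not Alibaba's implementation.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Iterator, List, Tuple


@dataclass
class ThinkerStep:
    token: str           # text token emitted by the toy Thinker
    hidden: List[float]  # stand-in for the representation handed to the Talker


def thinker(inputs: Dict[str, str]) -> Iterator[ThinkerStep]:
    """Toy Thinker: 'understands' the inputs and streams text tokens."""
    summary = "I see " + " and ".join(sorted(inputs))
    for i, word in enumerate(summary.split()):
        yield ThinkerStep(token=word, hidden=[float(i), float(len(word))])


def talker(steps: Iterable[ThinkerStep]) -> Iterator[Tuple[str, bytes]]:
    """Toy Talker: converts each Thinker step into (text, fake audio) as it arrives."""
    for step in steps:
        fake_audio = bytes(int(x) % 256 for x in step.hidden)
        yield step.token, fake_audio


# Because both stages are generators, output starts before the Thinker finishes.
for text, audio in talker(thinker({"image": "cat.png", "audio": "meow.wav"})):
    print(text, len(audio))
```

The point of the sketch is the shape of the data flow: the Talker consumes the Thinker's output incrementally rather than waiting for a complete response.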

Precise Audio-Visual Synchronization

It introduces a new technique called TMRoPE (Time-aligned Multimodal RoPE) specifically designed to synchronize timestamps between video frames and accompanying audio tracks.
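One way to picture time alignment is to give audio and video tokens position IDs derived from a shared clock, so that tokens occurring at the same moment receive the same temporal ID. The sketch below illustrates only that idea. The 40 ms tick, the frame rates, and the function names are assumptions made for illustration, and the rotary embedding step itself (the "RoPE" part) is not shown.

```python
from typing import List, Tuple

TICK_MS = 40  # hypothetical time granularity per temporal ID (an assumption)


def temporal_id(timestamp_ms: int, tick_ms: int = TICK_MS) -> int:
    """Map a timestamp in milliseconds to an integer temporal position ID."""
    return timestamp_ms // tick_ms


def align(video_ms: List[int], audio_ms: List[int]) -> List[Tuple[int, str, int]]:
    """Merge video and audio tokens into one sequence ordered by temporal ID."""
    tokens = [(temporal_id(t), "video", t) for t in video_ms]
    tokens += [(temporal_id(t), "audio", t) for t in audio_ms]
    return sorted(tokens)


video = [0, 500, 1000]            # assumed: one video frame every 500 ms
audio = list(range(0, 1040, 40))  # assumed: one audio frame every 40 ms

for tid, modality, t in align(video, audio)[:4]:
    print(tid, modality, t)
```

In this toy setup the video frame at 500 ms and the audio frame at 480 ms both land on temporal ID 12, so a model reading the merged sequence would see them as simultaneous.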

Real-Time Interaction

The architecture supports chunked input and immediate output, making it suitable for truly real-time voice and video chat applications.
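Chunked input with immediate output can be sketched with plain generators. This is a minimal illustration, not the model's actual streaming interface: `chunked` and `respond` are hypothetical names, the integers stand in for audio samples, and the formatted string stands in for model inference on one chunk.

```python
from typing import Iterable, Iterator, List


def chunked(samples: Iterable[int], chunk_size: int) -> Iterator[List[int]]:
    """Yield fixed-size chunks as soon as each one fills; flush the remainder."""
    buffer: List[int] = []
    for sample in samples:
        buffer.append(sample)
        if len(buffer) == chunk_size:
            yield buffer
            buffer = []
    if buffer:
        yield buffer


def respond(chunks: Iterable[List[int]]) -> Iterator[str]:
    """Emit one output per chunk without waiting for the full input."""
    for i, chunk in enumerate(chunks):
        # Placeholder for running a model on a single chunk.
        yield f"chunk {i}: {len(chunk)} samples, peak={max(chunk)}"


for line in respond(chunked(range(10), 4)):
    print(line)
```

The first response is produced after four samples rather than after all ten, which is the property that keeps latency low in a live voice or video conversation.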

Natural and Robust Speech Generation

Alibaba highlights the model’s ability to produce high-quality, natural-sounding speech, claiming it surpasses many existing alternatives in robustness.

Effective Instruction Following (Voice & Text)

Benchmarks indicate it follows instructions given via voice input nearly as effectively as text commands.

Performance on Standard Benchmarks

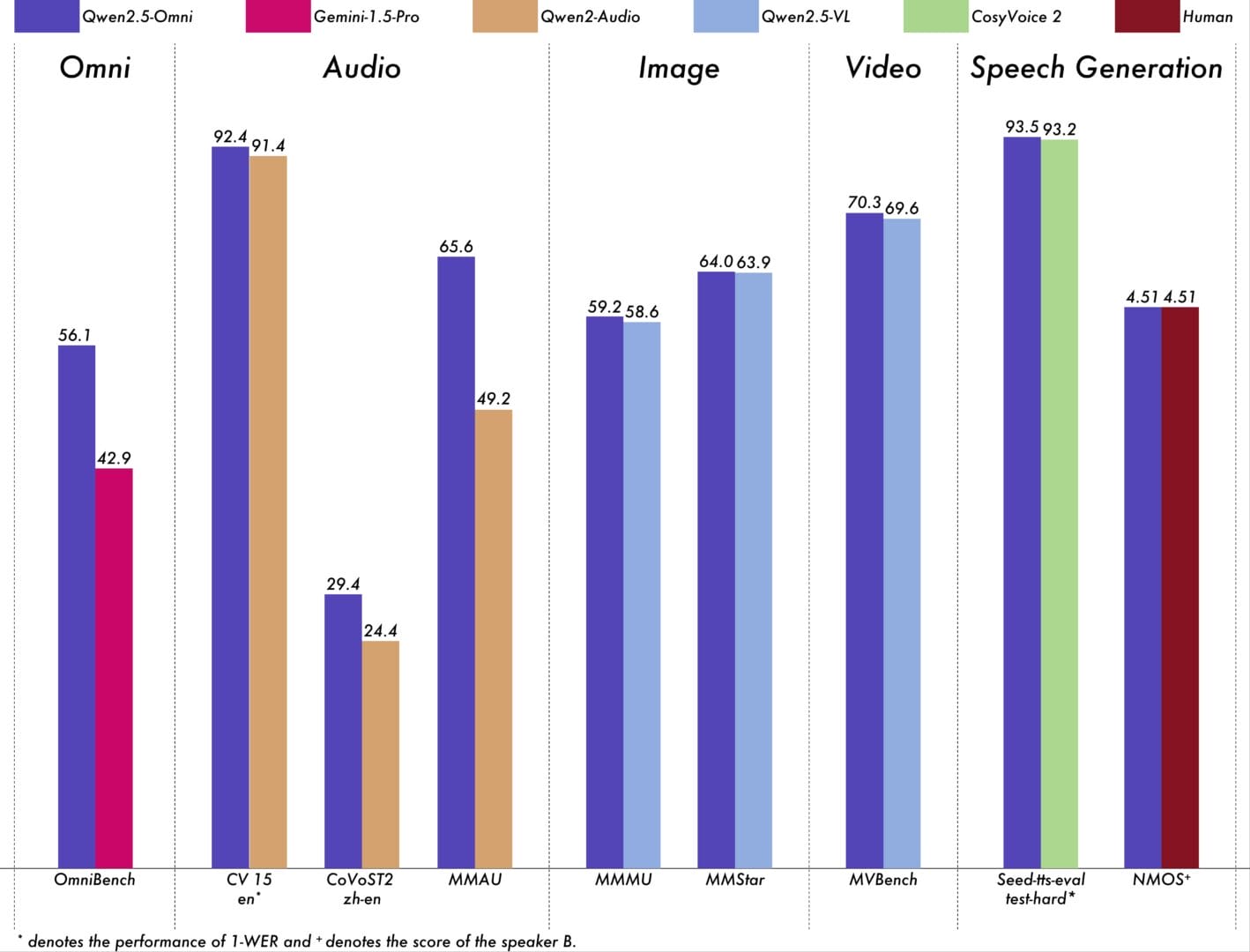

Alibaba backs its claims with strong benchmark results. According to the company’s published data:

On the multimodal OmniBench test, Qwen2.5-Omni scored 56.1, which is 13.2 points higher than Google’s Gemini-1.5-Pro (42.9).

In audio tasks such as the Common Voice 15 (CV15) benchmark, it outperformed Alibaba’s previous Qwen2-Audio model.

For image-related understanding tasks (e.g., MMMU benchmark), its performance is comparable to specialized vision-language models like Qwen2.5-VL-7B.

Open Source Access and Efficiency

A key aspect of this release is its open-source availability.

Developers can freely access Qwen2.5-Omni on popular platforms like Hugging Face, GitHub, ModelScope (Alibaba’s platform), and DashScope.

This accessibility, combined with its ability to run locally on devices, promotes wider innovation and addresses user privacy concerns, as data processing can happen on the user’s machine rather than being sent externally.

Navigating the Competitive AI Landscape

The launch of Qwen2.5-Omni comes amid fierce competition, both globally and within China’s AI sector.

The “DeepSeek moment,” referring to the impact of rival DeepSeek’s open-source models, has spurred companies like Alibaba and Baidu to release increasingly capable and cost-effective models at an unprecedented pace.

This move aligns with Alibaba’s broader AI strategy, backed by a planned $53 billion investment in cloud computing and AI infrastructure over the next three years.

The company is positioning itself not just as a model developer but also as a key infrastructure provider.

Strategic partnerships further underscore this ambition, including collaborations with Apple for AI integration on iPhones in China and with BMW to embed its AI into next-generation vehicles.

Future Development Plans

Alibaba intends to continue refining Qwen2.5-Omni.

Future goals include enhancing its ability to follow voice commands with even greater precision and improving its collaborative understanding of combined audio-visual information.

The long-term vision aims to integrate more modalities, moving closer to a truly comprehensive “omni-model.”

Frequently Asked Questions (FAQ)

Is Qwen2.5-Omni free to use?

Yes, the model is open-source and available free of charge for developers and researchers on platforms like Hugging Face, GitHub, and ModelScope.

You can also interact with it via the company’s “Qwen Chat” interface.

Explore access methods on the model page.

What makes Qwen2.5-Omni special?

Its key differentiators are its handling of diverse data types (text, image, audio, video), its efficient design for mobile and edge devices, and its open-source nature. The “Thinker-Talker” architecture is also a notable innovation.

Can Qwen2.5-Omni run without an internet connection?

Yes, it’s designed for local execution on devices, which enhances user privacy and allows for offline operation once downloaded.